Decoding QR codes using neural networks

In this example, a neural network (NN) decodes QR codes that encode texts of up to 11 characters.

This Jupyter notebook is available here.

It is worth mentioning that using neural networks for QR decoding is not an optimal approach: there are well-defined, simple algorithms for this task. At the same time, because training data is easy to generate, QR codes make a good practical exercise for building NNs.

Decoding a QR code may be defined as a classification task. The result is a combination of the outputs of eleven classifiers, one for each position. Each classifier predicts the symbol at its position. If an encoded text is shorter than eleven characters, a special end-of-input (EOI) symbol, written as ‘#’, should be produced for the empty positions. In this particular example, all texts consist of lowercase letters a..z and spaces. The total number of classes to predict is 28: 26 letters, one for space, and one for EOI. For example, the string ‘hello’ is represented by the classes ‘h’, ‘e’, ‘l’, ‘l’, ‘o’, ‘#’, ‘#’, ‘#’, ‘#’, ‘#’, ‘#’. As a minor optimization, a working decoder could stop classifying symbols as soon as the EOI class is detected, since by definition EOI can appear only at the end of the output.
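The label scheme above can be sketched in plain Python. This is a minimal illustration, assuming the same alphabet as the constants defined later (a..z, space, ‘#’ for EOI); the helpers text_to_classes and classes_to_text are hypothetical names, not part of the notebook. The decoding side also shows the early stop at the first EOI.

```python
import string

# Class alphabet as described above: a..z, space, and '#' for end of input (EOI).
LETTERS = string.ascii_lowercase + ' '
EOI = '#'
ALL_CHAR_CLASSES = LETTERS + EOI  # 28 classes
MAX_SIZE = 11


def text_to_classes(text, max_size=MAX_SIZE):
    """Map a text to one class index per position, padding empty positions with EOI."""
    if len(text) > max_size:
        raise ValueError("text is longer than max_size")
    padded = text + EOI * (max_size - len(text))
    return [ALL_CHAR_CLASSES.index(c) for c in padded]


def classes_to_text(class_ids):
    """Turn per-position predictions back into text, stopping at the first EOI."""
    chars = []
    for idx in class_ids:
        c = ALL_CHAR_CLASSES[idx]
        if c == EOI:
            break  # EOI can only appear at the end, so the remaining positions are skipped
        chars.append(c)
    return ''.join(chars)


labels = text_to_classes('hello')
print(labels)
print(classes_to_text(labels))
```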

P.S. Why is the supported size up to 11 characters (and not, say, 10)? So that ‘hello world’, which is exactly 11 characters long, can be decoded.

1. Initialization

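To run the example, the latest Anaconda distribution is used; it comes with all the main libraries. The QR code library has to be installed separately. The code imports the Python package “qrcode”, which is available from PyPI (pip install "qrcode[pil]"; the “pil” extra adds Pillow, which is used to render the images) and from the conda-forge channel.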

```python
# import all required libraries
import random
import string

import qrcode
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
from tensorflow.keras.callbacks import EarlyStopping
from tensorflow.keras.callbacks import ModelCheckpoint

# the next two lines work around a runtime error on macOS
# (a duplicate OpenMP runtime being loaded)
import os
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'
```

As mentioned above, input texts are up to eleven characters long and may contain lowercase letters and spaces. End-of-input is a special class assigned to empty positions. Below are the constants used to build and train the NNs.

LETTERS = string.ascii_lowercase + ' ' # letters and space

EOI = '#' # end of input

MAX_SIZE = 11 # max input/output size

ALL_CHAR_CLASSES = LETTERS + EOI # all available classes# define a function for a QR code generation

# box_size is an option to manage size of an output image

def make_qr(text, box_size = 1):

qr = qrcode.QRCode(

version=1,

box_size=box_size,

border=0)

qr.add_data(text)

qr.make(fit=True)

return qr.make_image(fill_color="black", back_color="white")# generate a sample image

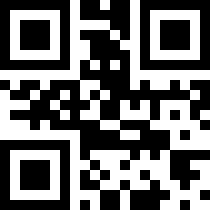

make_qr("hello world", box_size=10)

In the above example, the box size is set to 10 to make the details of the image larger. It makes sense to minimize the size of the images fed into an NN, so all QR codes below use a box size of 1 (one pixel per QR module) and no border. The generated images are therefore 21x21 pixels, the size of a version 1 QR code.
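The 21x21 figure follows from the QR standard: a version v symbol has 4v + 17 modules per side. A small sketch of the resulting image size, assuming the image side is (modules + 2 × border) × box_size pixels, which is how the qrcode package appears to size its images; qr_modules and qr_image_side are hypothetical helpers. Note that make(fit=True) may switch to a larger version if the data does not fit; with the library's default error correction level (M), a version 1 code holds up to 14 bytes, so 11 lowercase characters fit.

```python
def qr_modules(version):
    """Number of modules (cells) per side of a QR code symbol of the given version."""
    if not 1 <= version <= 40:
        raise ValueError("QR versions range from 1 to 40")
    return 4 * version + 17


def qr_image_side(version, box_size=1, border=0):
    """Image side in pixels: each module becomes box_size pixels, plus the border on both sides."""
    return (qr_modules(version) + 2 * border) * box_size


print(qr_image_side(1))               # 21
print(qr_image_side(1, box_size=10))  # 210
```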

```python
print('FFNN input size is', make_qr("hello world").size)
```

FFNN input size is (21, 21)

The training data set needs to be generated. The function below creates a given number of examples within the given length constraints.

The output is uniformly distributed: text lengths are drawn uniformly from [min_size, max_size], and each character is drawn uniformly from LETTERS.

The function returns a NumPy array of all images and a list of the corresponding texts. The image data is already formatted to be fed into an NN; the texts will be converted to the required label format later, as needed.

```python
# a function to generate a training data set
# output: (numpy array of images, list of corresponding texts)
def generate_dataset(n_of_samples, min_size=1, max_size=MAX_SIZE):
    data = []
    labels = []
    report_step = max(1, int(n_of_samples * .1))  # report progress every ~10%; avoid a zero step
    report = report_step
    print("Generating")
    for i in range(n_of_samples):
        if i == report:
            print("Done:", report / report_step * 10, "%")
            report = report + report_step
        size = random.randint(min_size, max_size)  # uniform length, inclusive bounds
        s = ''.join(random.choice(LETTERS) for _ in range(size))  # uniform characters
        img = make_qr(s)
        assert img.size == (21, 21)  # fails if qrcode had to pick a larger version
        qr = np.asarray(img, dtype='float')
        data.append(qr)
        labels.append(s)
    print("Done:", "100", "%")
    return (np.asarray(data), labels)
```

The training set has 50k samples. This number is arbitrary: it was chosen so that the code runs relatively fast on an average laptop. A larger data set would likely lead to better accuracy.

```python
(training_data, training_labels) = generate_dataset(50000)
```

Generating

Done: 10.0 %

Done: 20.0 %

Done: 30.0 %

Done: 40.0 %

Done: 50.0 %

Done: 60.0 %

Done: 70.0 %

Done: 80.0 %

Done: 90.0 %

Done: 100 %

2. Building a feed-forward neural network (FFNN) to estimate encoded text size

To get the ball rolling, the first FFNN will extract the text length from a QR image. This can be framed as a classification problem: the input is a QR image, and the output is one of 11 classes, one for each possible size from 1 to 11.

The training set already has all the QR images. The size labels should be created from the original texts:

# create a list of sizes based on original text labels

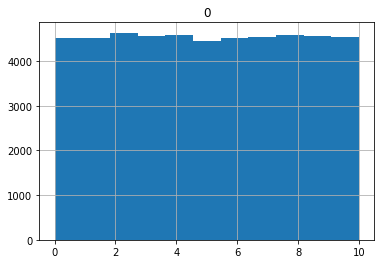

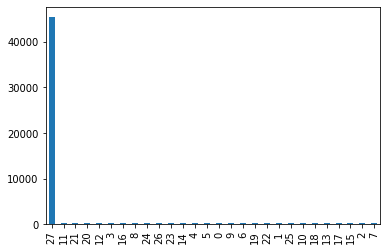

training_label_sizes = list(map(lambda x: [len(x) - 1], training_labels))# confirm sizes are uniformly distributed

sizes_frame = pd.DataFrame(training_label_sizes)

sizes_frame.hist(bins=11)array([[<matplotlib.axes._subplots.AxesSubplot object at 0x7fbe5f453a90>]],

dtype=object)

As expected, sizes are uniformly distributed.

Define the first NN classifier, with a single hidden layer:

```python
size_classifier = keras.Sequential([
    keras.layers.Flatten(input_shape=(21, 21)),  # all input images are 21x21 pixels
    keras.layers.Dense(21*21, activation='relu'),  # hidden layer has the same size as the input
    keras.layers.Dense(MAX_SIZE)  # one output (logit) per class, i.e. per possible size
])

size_classifier.compile(optimizer='adam',
                        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                        metrics=['accuracy'])

size_classifier.summary()
```

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

flatten (Flatten) (None, 441) 0

_________________________________________________________________

dense (Dense) (None, 441) 194922

_________________________________________________________________

dense_1 (Dense) (None, 11) 4862

=================================================================

Total params: 199,784

Trainable params: 199,784

Non-trainable params: 0

_________________________________________________________________

Time to train the network. The validation split is set to 5%, which gives 2.5k validation samples. Keras takes the validation samples from the end of the data, before shuffling; since the original data is randomly generated, there is no need to re-shuffle it.

Early stopping monitors the validation loss. The best model is not saved with a checkpoint, since this is an exploration run.
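The sample counts printed in the training logs follow directly from the 5% split. A minimal sketch, assuming Keras places the split point at floor(n × (1 − validation_split)) and keeps the tail for validation; split_sizes is a hypothetical helper, not a Keras function:

```python
import math


def split_sizes(n_samples, validation_split):
    """(train, validation) sizes, assuming the split point is floor(n * (1 - validation_split))."""
    split_at = int(math.floor(n_samples * (1.0 - validation_split)))
    return split_at, n_samples - split_at


print(split_sizes(50000, 0.05))        # (47500, 2500)
print(split_sizes(50000 // 11, 0.05))  # (4317, 228)
```

The second call corresponds to the smaller 11-character data set used in section 4.1, whose log reports 4317 training and 228 validation samples.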

```python
# train the size classifier
size_history = size_classifier.fit(
    training_data, np.asarray(training_label_sizes),
    epochs=30, batch_size=128,
    validation_split=0.05,
    callbacks=[EarlyStopping(monitor='val_loss', min_delta=0.0001, patience=3)]
)
```

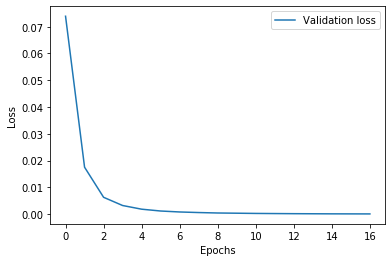

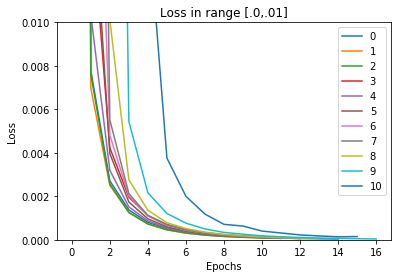

```python
# plot the validation loss
plt.plot(size_history.history['val_loss'], label='Validation loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend(loc="best")
plt.show()
```

Train on 47500 samples, validate on 2500 samples

Epoch 1/30

47500/47500 [==============================] - 3s 56us/sample - loss: 0.4086 - accuracy: 0.9015 - val_loss: 0.0739 - val_accuracy: 0.9920

Epoch 2/30

47500/47500 [==============================] - 2s 45us/sample - loss: 0.0338 - accuracy: 0.9976 - val_loss: 0.0175 - val_accuracy: 1.0000

Epoch 3/30

47500/47500 [==============================] - 2s 47us/sample - loss: 0.0100 - accuracy: 0.9997 - val_loss: 0.0063 - val_accuracy: 1.0000

Epoch 4/30

47500/47500 [==============================] - 3s 57us/sample - loss: 0.0042 - accuracy: 1.0000 - val_loss: 0.0032 - val_accuracy: 1.0000

Epoch 5/30

47500/47500 [==============================] - 2s 52us/sample - loss: 0.0021 - accuracy: 1.0000 - val_loss: 0.0018 - val_accuracy: 1.0000

Epoch 6/30

47500/47500 [==============================] - 2s 50us/sample - loss: 0.0013 - accuracy: 1.0000 - val_loss: 0.0011 - val_accuracy: 1.0000

Epoch 7/30

47500/47500 [==============================] - 3s 54us/sample - loss: 8.1631e-04 - accuracy: 1.0000 - val_loss: 7.8178e-04 - val_accuracy: 1.0000

Epoch 8/30

47500/47500 [==============================] - 3s 54us/sample - loss: 5.6064e-04 - accuracy: 1.0000 - val_loss: 5.6709e-04 - val_accuracy: 1.0000

Epoch 9/30

47500/47500 [==============================] - 2s 48us/sample - loss: 3.9577e-04 - accuracy: 1.0000 - val_loss: 4.0009e-04 - val_accuracy: 1.0000

Epoch 10/30

47500/47500 [==============================] - 2s 47us/sample - loss: 2.8806e-04 - accuracy: 1.0000 - val_loss: 3.1972e-04 - val_accuracy: 1.0000

Epoch 11/30

47500/47500 [==============================] - 2s 49us/sample - loss: 2.1483e-04 - accuracy: 1.0000 - val_loss: 2.3780e-04 - val_accuracy: 1.0000

Epoch 12/30

47500/47500 [==============================] - 2s 50us/sample - loss: 1.6206e-04 - accuracy: 1.0000 - val_loss: 1.8862e-04 - val_accuracy: 1.0000

Epoch 13/30

47500/47500 [==============================] - 2s 49us/sample - loss: 1.2450e-04 - accuracy: 1.0000 - val_loss: 1.4431e-04 - val_accuracy: 1.0000

Epoch 14/30

47500/47500 [==============================] - 2s 48us/sample - loss: 9.6217e-05 - accuracy: 1.0000 - val_loss: 1.1433e-04 - val_accuracy: 1.0000

Epoch 15/30

47500/47500 [==============================] - 2s 48us/sample - loss: 7.5075e-05 - accuracy: 1.0000 - val_loss: 8.4301e-05 - val_accuracy: 1.0000

Epoch 16/30

47500/47500 [==============================] - 2s 49us/sample - loss: 5.8773e-05 - accuracy: 1.0000 - val_loss: 6.9176e-05 - val_accuracy: 1.0000

Epoch 17/30

47500/47500 [==============================] - 3s 53us/sample - loss: 4.6483e-05 - accuracy: 1.0000 - val_loss: 5.5826e-05 - val_accuracy: 1.0000

Training finished quickly: early stopping ended it after 17 of the 30 epochs, with a validation accuracy of 1.0.
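To see why training stopped at epoch 17, the early-stopping rule can be replayed on the logged val_loss values. This is a sketch of the rule as modelled on Keras EarlyStopping in 'min' mode, assuming that an epoch counts as an improvement only if it beats the best value by more than min_delta, and that training stops after `patience` epochs without one. early_stopping_epoch is a hypothetical helper, and the logged values are rounded, so borderline cases could differ from a real run.

```python
def early_stopping_epoch(val_losses, min_delta=0.0001, patience=3):
    """Return the 1-based epoch after which training stops, or None if it never stops."""
    best = float('inf')
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:  # improvement must exceed min_delta
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:  # too many epochs without improvement
                return epoch
    return None


# val_loss values copied from the size-classifier log above
size_val_loss = [0.0739, 0.0175, 0.0063, 0.0032, 0.0018, 0.0011,
                 7.8178e-04, 5.6709e-04, 4.0009e-04, 3.1972e-04, 2.3780e-04,
                 1.8862e-04, 1.4431e-04, 1.1433e-04, 8.4301e-05, 6.9176e-05,
                 5.5826e-05]
print(early_stopping_epoch(size_val_loss))  # 17
```

With min_delta = 0.0001, the tiny late-stage gains (e.g. from 1.1433e-04 to 8.4301e-05) no longer count as improvements, which is what ends the run even though the loss is still falling.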

It is worth running the classifier on a few examples:

```python
# function to get the text size from a QR image using the pretrained NN
# the original NN is extended with a softmax layer to turn logits into probabilities
def get_size(qr_img):
    assert qr_img.size == (21, 21)
    qr = np.asarray(qr_img, dtype='float')  # convert image to numpy array
    batch = np.asarray([qr])  # the NN takes a batch of images, so make a one-item batch
    soft_max_model = keras.Sequential([size_classifier, keras.layers.Softmax()])  # easier to interpret confidence
    output = soft_max_model.predict(batch)  # probability distribution over sizes
    largest_index = np.argmax(output[0], axis=0)  # index of the largest probability
    print("Size", largest_index + 1, ", confidence", output[0][largest_index])  # class index = size - 1

test_set = [
    'x',
    'yo',
    'ham',
    'four',
    'f ive',
    'sixsix',
    'seven z',
    'ei ght x',
    'nine hops',
    'ten strike',
    'hello world'
]

for t in test_set:
    get_size(make_qr(t))
```

Size 1 , confidence 0.9999956

Size 2 , confidence 0.9999944

Size 3 , confidence 0.9999914

Size 4 , confidence 0.99998057

Size 5 , confidence 0.9999546

Size 6 , confidence 0.99999034

Size 7 , confidence 0.9999995

Size 8 , confidence 0.9999913

Size 9 , confidence 0.9998647

Size 10 , confidence 0.99928135

Size 11 , confidence 0.99999785

All sizes are correct and confidence is at least three nines. This is a good result.
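Because the classifier outputs logits (from_logits=True), the Softmax layer in get_size does not change which size wins: softmax is monotonic, so the argmax of the logits and of the probabilities is the same. The softmax is only needed to read the winning score as a confidence. A plain-Python sketch of that step, using hypothetical logits and hypothetical helper names:

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_confidence(logits):
    """Return (argmax index, its softmax probability), mirroring what get_size prints."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]


# hypothetical logits for three size classes
index, confidence = predict_with_confidence([2.0, 9.0, 1.0])
print("Size", index + 1, ", confidence", round(confidence, 4))
```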

3. Exploring options by predicting the first and the last characters

The NN for size prediction works very well. As the next step of exploration, we can move on to character prediction, starting with the first and last characters to see how the model behaves.

Since networks with the same structure are trained for different positions, it makes sense to define common functions:

```python
# create training labels for existing texts and the required position
def make_labels_for_position(labels, pos):
    chars = list(map(lambda x: x[pos] if pos < len(x) else EOI, labels))  # either a letter or EOI
    return list(map(lambda x: [ALL_CHAR_CLASSES.index(x)], chars))  # classes are indexed [0..len(ALL_CHAR_CLASSES))

# define a simple model: the input is a QR image, the output is a character class
# start with a single hidden layer
def define_char_classifier():
    model = keras.Sequential([
        keras.layers.Flatten(input_shape=(21, 21)),
        keras.layers.Dense(21*21, activation='relu'),
        keras.layers.Dense(len(ALL_CHAR_CLASSES))
    ])
    model.compile(optimizer='adam',
                  loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                  metrics=['accuracy'])
    return model

# train a model using common options
def train_model(model, data, labels, epochs=75, patience=3):
    return model.fit(
        data, np.asarray(labels),
        epochs=epochs, batch_size=128,
        validation_split=0.05,
        callbacks=[EarlyStopping(monitor='val_loss', min_delta=0.0001, patience=patience)]
    )

# get the letter predicted by a model
# there is one network per position, so no position argument is needed
# input is a QR image; output is the letter for the position the model was trained for
def get_letter(qr_img, model):
    assert qr_img.size == (21, 21)
    qr = np.asarray(qr_img, dtype='float')
    batch = np.asarray([qr])  # convert image to NN input (a one-item batch)
    output = model.predict(batch)  # one score per class (logits, or probabilities if the model ends with Softmax)
    largest_index = np.argmax(output[0], axis=0)  # index of the most probable class
    c = ALL_CHAR_CLASSES[largest_index]  # the actual character
    return (c, output[0][largest_index], np.around(output, 2))  # (character, its score, all scores rounded)
```

Start with the first letter:

```python
# create training labels using the first character of every original text
training_labels_char0 = make_labels_for_position(training_labels, 0)

# create and train the model
model = define_char_classifier()
model.summary()
hist0 = train_model(model, training_data, training_labels_char0)
```

Model: "sequential_12"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

flatten_1 (Flatten) (None, 441) 0

_________________________________________________________________

dense_2 (Dense) (None, 441) 194922

_________________________________________________________________

dense_3 (Dense) (None, 28) 12376

=================================================================

Total params: 207,298

Trainable params: 207,298

Non-trainable params: 0

_________________________________________________________________

Train on 47500 samples, validate on 2500 samples

Epoch 1/75

47500/47500 [==============================] - 3s 59us/sample - loss: 2.8364 - accuracy: 0.1838 - val_loss: 2.2639 - val_accuracy: 0.2784

Epoch 2/75

47500/47500 [==============================] - 2s 46us/sample - loss: 1.6036 - accuracy: 0.4995 - val_loss: 0.9522 - val_accuracy: 0.7720

Epoch 3/75

47500/47500 [==============================] - 2s 51us/sample - loss: 0.5686 - accuracy: 0.8949 - val_loss: 0.3345 - val_accuracy: 0.9316

Epoch 4/75

47500/47500 [==============================] - 3s 55us/sample - loss: 0.2226 - accuracy: 0.9574 - val_loss: 0.1639 - val_accuracy: 0.9608

Epoch 5/75

47500/47500 [==============================] - 3s 58us/sample - loss: 0.1177 - accuracy: 0.9832 - val_loss: 0.0919 - val_accuracy: 0.9900

Epoch 6/75

47500/47500 [==============================] - 2s 52us/sample - loss: 0.0576 - accuracy: 0.9992 - val_loss: 0.0425 - val_accuracy: 1.0000

Epoch 7/75

47500/47500 [==============================] - 3s 53us/sample - loss: 0.0287 - accuracy: 1.0000 - val_loss: 0.0237 - val_accuracy: 1.0000

Epoch 8/75

47500/47500 [==============================] - 3s 56us/sample - loss: 0.0170 - accuracy: 1.0000 - val_loss: 0.0147 - val_accuracy: 1.0000

Epoch 9/75

47500/47500 [==============================] - 3s 55us/sample - loss: 0.0110 - accuracy: 1.0000 - val_loss: 0.0098 - val_accuracy: 1.0000

Epoch 10/75

47500/47500 [==============================] - 3s 59us/sample - loss: 0.0075 - accuracy: 1.0000 - val_loss: 0.0069 - val_accuracy: 1.0000

Epoch 11/75

47500/47500 [==============================] - 3s 57us/sample - loss: 0.0054 - accuracy: 1.0000 - val_loss: 0.0050 - val_accuracy: 1.0000

Epoch 12/75

47500/47500 [==============================] - 3s 53us/sample - loss: 0.0039 - accuracy: 1.0000 - val_loss: 0.0037 - val_accuracy: 1.0000

Epoch 13/75

47500/47500 [==============================] - 2s 52us/sample - loss: 0.0029 - accuracy: 1.0000 - val_loss: 0.0028 - val_accuracy: 1.0000

Epoch 14/75

47500/47500 [==============================] - 3s 55us/sample - loss: 0.0022 - accuracy: 1.0000 - val_loss: 0.0021 - val_accuracy: 1.0000

Epoch 15/75

47500/47500 [==============================] - 3s 53us/sample - loss: 0.0017 - accuracy: 1.0000 - val_loss: 0.0017 - val_accuracy: 1.0000

Epoch 16/75

47500/47500 [==============================] - 3s 58us/sample - loss: 0.0013 - accuracy: 1.0000 - val_loss: 0.0013 - val_accuracy: 1.0000

Epoch 17/75

47500/47500 [==============================] - 3s 59us/sample - loss: 0.0010 - accuracy: 1.0000 - val_loss: 9.7294e-04 - val_accuracy: 1.0000

Epoch 18/75

47500/47500 [==============================] - 3s 56us/sample - loss: 7.7575e-04 - accuracy: 1.0000 - val_loss: 7.4393e-04 - val_accuracy: 1.0000

Epoch 19/75

47500/47500 [==============================] - 3s 60us/sample - loss: 5.9778e-04 - accuracy: 1.0000 - val_loss: 5.7824e-04 - val_accuracy: 1.0000

Epoch 20/75

47500/47500 [==============================] - 3s 60us/sample - loss: 4.6365e-04 - accuracy: 1.0000 - val_loss: 4.4954e-04 - val_accuracy: 1.0000

Epoch 21/75

47500/47500 [==============================] - 3s 55us/sample - loss: 3.6141e-04 - accuracy: 1.0000 - val_loss: 3.5091e-04 - val_accuracy: 1.0000

Epoch 22/75

47500/47500 [==============================] - 3s 55us/sample - loss: 2.8391e-04 - accuracy: 1.0000 - val_loss: 2.7763e-04 - val_accuracy: 1.0000

Epoch 23/75

47500/47500 [==============================] - 3s 53us/sample - loss: 2.2476e-04 - accuracy: 1.0000 - val_loss: 2.1912e-04 - val_accuracy: 1.0000

Epoch 24/75

47500/47500 [==============================] - 3s 55us/sample - loss: 1.7838e-04 - accuracy: 1.0000 - val_loss: 1.7540e-04 - val_accuracy: 1.0000

Epoch 25/75

47500/47500 [==============================] - 3s 55us/sample - loss: 1.4194e-04 - accuracy: 1.0000 - val_loss: 1.4003e-04 - val_accuracy: 1.0000

Epoch 26/75

47500/47500 [==============================] - 3s 55us/sample - loss: 1.1334e-04 - accuracy: 1.0000 - val_loss: 1.1256e-04 - val_accuracy: 1.0000

Epoch 27/75

47500/47500 [==============================] - 3s 56us/sample - loss: 9.0879e-05 - accuracy: 1.0000 - val_loss: 8.9801e-05 - val_accuracy: 1.0000

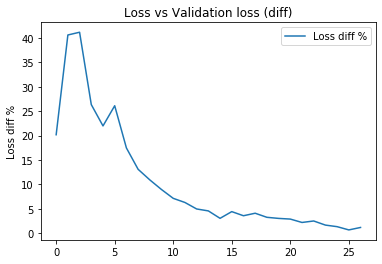

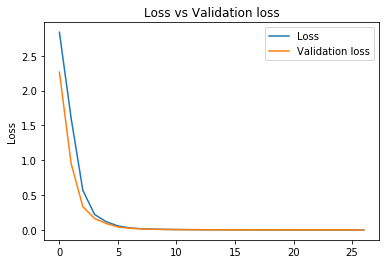

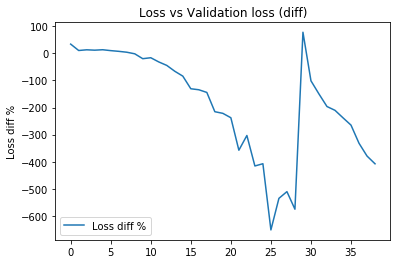

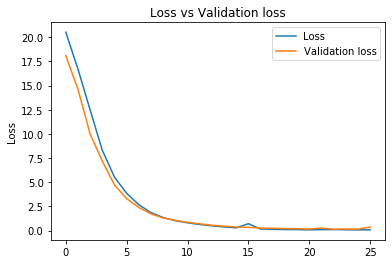

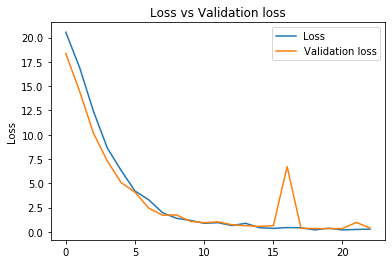

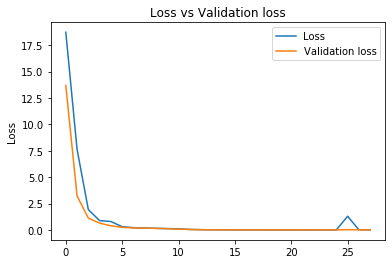

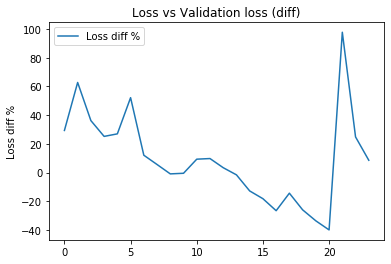

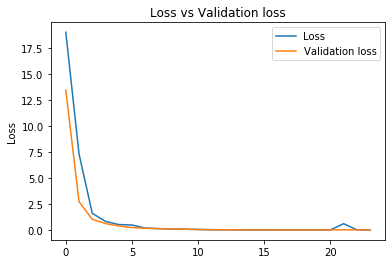

After a short training run (early stopping ended it after 27 of the 75 epochs), validation accuracy is 1. Loss and validation loss are also close:

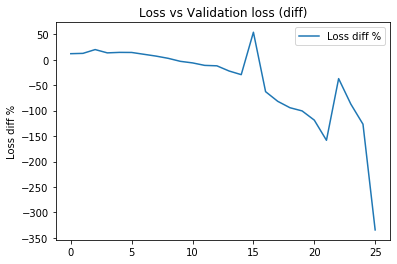

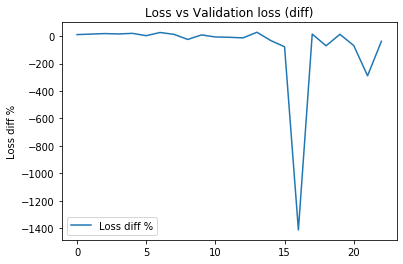

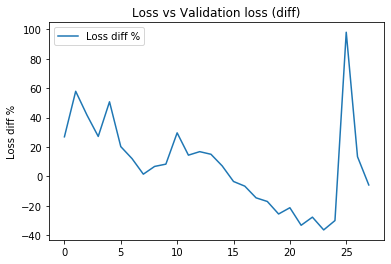

```python
# plot the relative difference between training and validation loss, in %
def plot_loss_vs_validation_loss_diff(train_history, abs_output=False):
    loss = np.asarray(train_history.history['loss'])
    val_loss = np.asarray(train_history.history['val_loss'])
    loss_diff = (loss - val_loss) / loss * 100  # how much validation loss differs from loss
    if abs_output:
        loss_diff = np.abs(loss_diff)
    plt.plot(loss_diff, label='Loss diff %')
    plt.title('Loss vs Validation loss (diff)')
    plt.xlabel('Epochs')
    plt.ylabel('Loss diff %')
    plt.legend(loc="best")
    plt.show()

# plot training and validation loss on the same chart
def plot_loss_vs_validation_loss(train_history):
    plt.plot(np.asarray(train_history.history['loss']), label='Loss')
    plt.plot(np.asarray(train_history.history['val_loss']), label='Validation loss')
    plt.title('Loss vs Validation loss')
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    plt.legend(loc="best")
    plt.show()

plot_loss_vs_validation_loss_diff(hist0)
plot_loss_vs_validation_loss(hist0)
```

Let’s apply the trained network to a few examples:

```python
# function to try text samples
# each text is converted to a QR image, and then the network predicts the character at `position`
def predict_letters_in_position(list_of_samples, model, position):
    softmax_model = keras.Sequential([model, keras.layers.Softmax()])  # build once, outside the loop
    for t in list_of_samples:
        qr = make_qr(t)
        char_to_predict = t[position] if len(t) > position else EOI
        (c, confidence, _) = get_letter(qr, softmax_model)
        print(c == char_to_predict,
              'Expected:', char_to_predict,
              'Predicted:', c,
              'Input:', t,
              'Confidence:', confidence)

# use the test set defined earlier
predict_letters_in_position(test_set, model, 0)
```

True Expected: x Predicted: x Input: x Confidence: 0.99999416

True Expected: y Predicted: y Input: yo Confidence: 0.99993885

True Expected: h Predicted: h Input: ham Confidence: 0.99992704

True Expected: f Predicted: f Input: four Confidence: 0.99985504

True Expected: f Predicted: f Input: f ive Confidence: 0.999928

True Expected: s Predicted: s Input: sixsix Confidence: 0.9997311

True Expected: s Predicted: s Input: seven z Confidence: 0.99991214

True Expected: e Predicted: e Input: ei ght x Confidence: 0.99977547

True Expected: n Predicted: n Input: nine hops Confidence: 0.9998703

True Expected: t Predicted: t Input: ten strike Confidence: 0.9998048

True Expected: h Predicted: h Input: hello world Confidence: 0.999866

Again, great accuracy and confidence.

Time to try the last character:

```python
# create training labels from existing texts
training_labels_char10 = make_labels_for_position(training_labels, 10)

# build and train a model, then apply it to the text samples
model = define_char_classifier()
hist10 = train_model(model, training_data, training_labels_char10)
predict_letters_in_position(test_set, model, 10)
```

Train on 47500 samples, validate on 2500 samples

Epoch 1/75

47500/47500 [==============================] - 3s 60us/sample - loss: 0.4419 - accuracy: 0.9071 - val_loss: 0.2932 - val_accuracy: 0.9192

Epoch 2/75

47500/47500 [==============================] - 2s 46us/sample - loss: 0.3016 - accuracy: 0.9155 - val_loss: 0.2711 - val_accuracy: 0.9232

Epoch 3/75

47500/47500 [==============================] - 2s 53us/sample - loss: 0.2629 - accuracy: 0.9220 - val_loss: 0.2296 - val_accuracy: 0.9260

Epoch 4/75

47500/47500 [==============================] - 3s 57us/sample - loss: 0.2224 - accuracy: 0.9305 - val_loss: 0.1973 - val_accuracy: 0.9348

Epoch 5/75

47500/47500 [==============================] - 3s 57us/sample - loss: 0.1786 - accuracy: 0.9419 - val_loss: 0.1556 - val_accuracy: 0.9456

Epoch 6/75

47500/47500 [==============================] - 2s 51us/sample - loss: 0.1386 - accuracy: 0.9553 - val_loss: 0.1253 - val_accuracy: 0.9564

Epoch 7/75

47500/47500 [==============================] - 3s 53us/sample - loss: 0.1036 - accuracy: 0.9691 - val_loss: 0.0963 - val_accuracy: 0.9692

Epoch 8/75

47500/47500 [==============================] - 2s 51us/sample - loss: 0.0764 - accuracy: 0.9790 - val_loss: 0.0735 - val_accuracy: 0.9768

Epoch 9/75

47500/47500 [==============================] - 2s 52us/sample - loss: 0.0545 - accuracy: 0.9874 - val_loss: 0.0557 - val_accuracy: 0.9828

Epoch 10/75

47500/47500 [==============================] - 3s 61us/sample - loss: 0.0391 - accuracy: 0.9918 - val_loss: 0.0469 - val_accuracy: 0.9868

Epoch 11/75

47500/47500 [==============================] - 3s 60us/sample - loss: 0.0278 - accuracy: 0.9949 - val_loss: 0.0325 - val_accuracy: 0.9904

Epoch 12/75

47500/47500 [==============================] - 3s 55us/sample - loss: 0.0192 - accuracy: 0.9972 - val_loss: 0.0254 - val_accuracy: 0.9948

Epoch 13/75

47500/47500 [==============================] - 3s 58us/sample - loss: 0.0133 - accuracy: 0.9987 - val_loss: 0.0193 - val_accuracy: 0.9968

Epoch 14/75

47500/47500 [==============================] - 3s 59us/sample - loss: 0.0094 - accuracy: 0.9993 - val_loss: 0.0156 - val_accuracy: 0.9972

Epoch 15/75

47500/47500 [==============================] - 3s 57us/sample - loss: 0.0065 - accuracy: 0.9997 - val_loss: 0.0120 - val_accuracy: 0.9988

Epoch 16/75

47500/47500 [==============================] - 3s 53us/sample - loss: 0.0047 - accuracy: 0.9999 - val_loss: 0.0109 - val_accuracy: 0.9976

Epoch 17/75

47500/47500 [==============================] - 3s 53us/sample - loss: 0.0035 - accuracy: 0.9999 - val_loss: 0.0083 - val_accuracy: 0.9984

Epoch 18/75

47500/47500 [==============================] - 3s 55us/sample - loss: 0.0027 - accuracy: 1.0000 - val_loss: 0.0065 - val_accuracy: 0.9992

Epoch 19/75

47500/47500 [==============================] - 3s 57us/sample - loss: 0.0019 - accuracy: 1.0000 - val_loss: 0.0061 - val_accuracy: 0.9992

Epoch 20/75

47500/47500 [==============================] - 3s 55us/sample - loss: 0.0015 - accuracy: 1.0000 - val_loss: 0.0049 - val_accuracy: 0.9996

Epoch 21/75

47500/47500 [==============================] - 3s 54us/sample - loss: 0.0012 - accuracy: 1.0000 - val_loss: 0.0040 - val_accuracy: 0.9992

Epoch 22/75

47500/47500 [==============================] - 3s 67us/sample - loss: 8.9789e-04 - accuracy: 1.0000 - val_loss: 0.0041 - val_accuracy: 0.9992

Epoch 23/75

47500/47500 [==============================] - 3s 56us/sample - loss: 6.9052e-04 - accuracy: 1.0000 - val_loss: 0.0028 - val_accuracy: 1.0000

Epoch 24/75

47500/47500 [==============================] - 3s 55us/sample - loss: 5.7058e-04 - accuracy: 1.0000 - val_loss: 0.0029 - val_accuracy: 0.9988

Epoch 25/75

47500/47500 [==============================] - 3s 66us/sample - loss: 4.2955e-04 - accuracy: 1.0000 - val_loss: 0.0022 - val_accuracy: 1.0000

Epoch 26/75

47500/47500 [==============================] - 3s 59us/sample - loss: 3.4260e-04 - accuracy: 1.0000 - val_loss: 0.0026 - val_accuracy: 0.9996

Epoch 27/75

47500/47500 [==============================] - 3s 56us/sample - loss: 2.7358e-04 - accuracy: 1.0000 - val_loss: 0.0017 - val_accuracy: 0.9996

Epoch 28/75

47500/47500 [==============================] - 3s 58us/sample - loss: 2.2452e-04 - accuracy: 1.0000 - val_loss: 0.0014 - val_accuracy: 1.0000

Epoch 29/75

47500/47500 [==============================] - 3s 59us/sample - loss: 1.8053e-04 - accuracy: 1.0000 - val_loss: 0.0012 - val_accuracy: 1.0000

Epoch 30/75

47500/47500 [==============================] - 3s 57us/sample - loss: 0.0066 - accuracy: 0.9982 - val_loss: 0.0015 - val_accuracy: 1.0000

Epoch 31/75

47500/47500 [==============================] - 3s 57us/sample - loss: 4.7402e-04 - accuracy: 1.0000 - val_loss: 9.5541e-04 - val_accuracy: 1.0000

Epoch 32/75

47500/47500 [==============================] - 3s 56us/sample - loss: 3.2779e-04 - accuracy: 1.0000 - val_loss: 8.1996e-04 - val_accuracy: 1.0000

Epoch 33/75

47500/47500 [==============================] - 3s 64us/sample - loss: 2.6733e-04 - accuracy: 1.0000 - val_loss: 7.9233e-04 - val_accuracy: 1.0000

Epoch 34/75

47500/47500 [==============================] - 3s 58us/sample - loss: 2.1291e-04 - accuracy: 1.0000 - val_loss: 6.6085e-04 - val_accuracy: 1.0000

Epoch 35/75

47500/47500 [==============================] - 3s 62us/sample - loss: 1.7700e-04 - accuracy: 1.0000 - val_loss: 5.9818e-04 - val_accuracy: 1.0000

Epoch 36/75

47500/47500 [==============================] - 4s 78us/sample - loss: 1.4876e-04 - accuracy: 1.0000 - val_loss: 5.4285e-04 - val_accuracy: 1.0000

Epoch 37/75

47500/47500 [==============================] - 4s 81us/sample - loss: 1.2318e-04 - accuracy: 1.0000 - val_loss: 5.3188e-04 - val_accuracy: 1.0000

Epoch 38/75

47500/47500 [==============================] - 3s 65us/sample - loss: 1.0261e-04 - accuracy: 1.0000 - val_loss: 4.9138e-04 - val_accuracy: 1.0000

Epoch 39/75

47500/47500 [==============================] - 3s 60us/sample - loss: 8.7857e-05 - accuracy: 1.0000 - val_loss: 4.4589e-04 - val_accuracy: 1.0000

True Expected: # Predicted: # Input: x Confidence: 1.0

True Expected: # Predicted: # Input: yo Confidence: 1.0

True Expected: # Predicted: # Input: ham Confidence: 1.0

True Expected: # Predicted: # Input: four Confidence: 1.0

True Expected: # Predicted: # Input: f ive Confidence: 1.0

True Expected: # Predicted: # Input: sixsix Confidence: 1.0

True Expected: # Predicted: # Input: seven z Confidence: 0.99999964

True Expected: # Predicted: # Input: ei ght x Confidence: 1.0

True Expected: # Predicted: # Input: nine hops Confidence: 0.99999595

True Expected: # Predicted: # Input: ten strike Confidence: 0.99999976

True Expected: d Predicted: d Input: hello world Confidence: 0.99700135

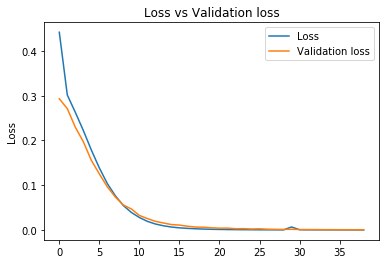

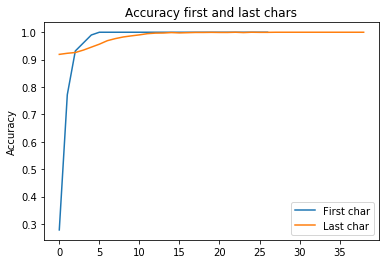

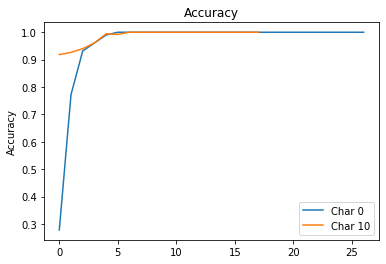

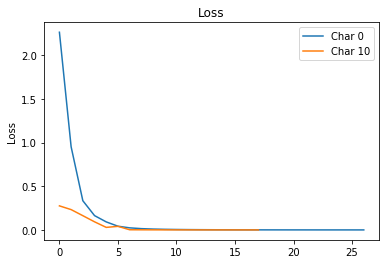

Validation accuracy seems good. Let’s compare metrics for the first and the last characters:

```python
plot_loss_vs_validation_loss_diff(hist10)
plot_loss_vs_validation_loss(hist10)

# compare validation accuracy for the first and last characters
plt.plot(hist0.history['val_accuracy'], label='First char')
plt.plot(hist10.history['val_accuracy'], label='Last char')
plt.title('Accuracy first and last chars')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend(loc="lower right")
plt.show()
```

The accuracy looks suspicious: from the very first epoch, the accuracy for the last character is above 90%! How so? Let’s look at the labels for the last character:

```python
frame10 = pd.DataFrame(training_labels_char10)
frame10[0].value_counts().plot(kind='bar')
```

Hm. The graph shows the number of samples per character class. Class 27 completely dominates the sample space.

```python
# print the class with index 27
print("Character 27 is", ALL_CHAR_CLASSES[27])
```

Character 27 is #

So the most frequent last character is #, the end of input. This makes sense. Since the data is generated uniformly at random, the data set has roughly the same number of texts for every length from 1 to 11, and every example shorter than 11 characters has EOI at the 11th position: about 10/11, or 91%, of all samples. This is a problem with the data, which leads to poor NN performance on the actual letters: from the NN's point of view, simply classifying every last character as EOI already gives about 91% accuracy, which matches the accuracy after the first epoch.
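The EOI share at each position can be computed exactly from the uniform length distribution, and it is also the accuracy of a trivial "always predict EOI" classifier. A small sketch, assuming lengths are uniform over 1..11 as in generate_dataset; eoi_share is a hypothetical helper:

```python
from fractions import Fraction

MAX_SIZE = 11


def eoi_share(position, min_size=1, max_size=MAX_SIZE):
    """Fraction of texts with EOI at a 0-based position, for lengths uniform in [min_size, max_size]."""
    lengths = range(min_size, max_size + 1)
    too_short = sum(1 for n in lengths if n <= position)  # texts with no character at this position
    return Fraction(too_short, len(lengths))


for pos in (0, 5, 10):
    share = eoi_share(pos)
    print(pos, share, f"{float(share):.1%}")
```

For the last position (index 10) this gives 10/11, about 90.9%, in line with the roughly 91% accuracy seen in the first epoch. For the 11-character-only data set used in section 4.1, eoi_share(10, min_size=11) is 0.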

The second conclusion concerns the number of samples. For the first character, there are 50k samples uniformly distributed over the letter classes. From the second character on, the distribution is no longer uniform: since the size is uniformly distributed, the number of EOI symbols grows with every subsequent position, leaving fewer and fewer samples for the character classes. For example, let’s see how often ‘a’ appears in the first and last positions:

```python
frame0 = pd.DataFrame(training_labels_char0)
print("Count 'a' in the first position:", len(frame0[frame0[0] == 0]))
print("Count 'a' in the last position:", len(frame10[frame10[0] == 0]))
```

Count 'a' in the first position: 1860

Count 'a' in the last position: 165

That is an order of magnitude difference, and it is expected. Every string of size 1 to 11 has a first character, and EOI can never appear in the first position, so each of the 27 remaining classes (26 letters and space) is expected to appear about 50k/27 ≈ 1852 times, close to the observed 1860.

This is not the case for the last character. The expected number of texts of size 11 is 50k/11 ≈ 4545, and they are spread over 27 classes (28 minus EOI, which cannot appear there): 4545/27 ≈ 168, close to the observed 165.
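Both estimates are instances of one formula. Assuming, as above, that lengths are uniform over 1..11 and characters are uniform over the 27 non-EOI classes, the expected count of one particular letter at 0-based position p among N samples is the number of texts long enough to have position p, divided evenly over the 27 classes:

$$
\mathbb{E}[n_p] = N \cdot \frac{11 - p}{11} \cdot \frac{1}{27}
$$

For N = 50000 this gives 50000/27 ≈ 1852 at p = 0 and 50000 · (1/11) / 27 ≈ 168 at p = 10.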

4. Improving accuracy for the last character

The NN performs well for the first character and poorly for the last one, and we should improve that. In general, the issue could be in the data, the NN design, or both. We will start with the data.

4.1 Better data

We can try to train a separate network on a data set containing only 11-character texts. For this experiment, the data set size is 50k/11 ≈ 4545, so the number of 11-character samples is the same as in the original set, but the character distribution at the last position is different: there is no EOI anymore. This should show whether the dominance of EOI is actually a problem.

(training_data11, training_labels11) = generate_dataset(int(50000/11), min_size=11, max_size=11)

training_labels11_char10 = make_labels_for_position(training_labels11, 10)

model = define_char_classifier()

hist10_with_size_11 = train_model(model, training_data11, training_labels11_char10, epochs=150)

predict_letters_in_position(test_set, model, 10)

Generating

Done: 100 %

Train on 4317 samples, validate on 228 samples

Epoch 1/150

4317/4317 [==============================] - 1s 177us/sample - loss: 3.3678 - accuracy: 0.0477 - val_loss: 3.3240 - val_accuracy: 0.0307

Epoch 2/150

4317/4317 [==============================] - 0s 66us/sample - loss: 3.2260 - accuracy: 0.0737 - val_loss: 3.3186 - val_accuracy: 0.0658

Epoch 3/150

4317/4317 [==============================] - 0s 58us/sample - loss: 3.1283 - accuracy: 0.1161 - val_loss: 3.2285 - val_accuracy: 0.0789

Epoch 4/150

4317/4317 [==============================] - 0s 58us/sample - loss: 3.0084 - accuracy: 0.1663 - val_loss: 3.2488 - val_accuracy: 0.0570

Epoch 5/150

4317/4317 [==============================] - 0s 59us/sample - loss: 2.8719 - accuracy: 0.2080 - val_loss: 3.0817 - val_accuracy: 0.0965

Epoch 6/150

4317/4317 [==============================] - 0s 59us/sample - loss: 2.7087 - accuracy: 0.2391 - val_loss: 2.9870 - val_accuracy: 0.1360

Epoch 7/150

4317/4317 [==============================] - 0s 58us/sample - loss: 2.5340 - accuracy: 0.2912 - val_loss: 2.8377 - val_accuracy: 0.1535

Epoch 8/150

4317/4317 [==============================] - 0s 60us/sample - loss: 2.3747 - accuracy: 0.3336 - val_loss: 2.7823 - val_accuracy: 0.1491

Epoch 9/150

4317/4317 [==============================] - 0s 62us/sample - loss: 2.2087 - accuracy: 0.3702 - val_loss: 2.6128 - val_accuracy: 0.2018

Epoch 10/150

4317/4317 [==============================] - 0s 60us/sample - loss: 2.0330 - accuracy: 0.4341 - val_loss: 2.4352 - val_accuracy: 0.2061

Epoch 11/150

4317/4317 [==============================] - 0s 62us/sample - loss: 1.8556 - accuracy: 0.4925 - val_loss: 2.2842 - val_accuracy: 0.2632

Epoch 12/150

4317/4317 [==============================] - 0s 67us/sample - loss: 1.6990 - accuracy: 0.5469 - val_loss: 2.1971 - val_accuracy: 0.2500

Epoch 13/150

4317/4317 [==============================] - 0s 67us/sample - loss: 1.5564 - accuracy: 0.5830 - val_loss: 2.0539 - val_accuracy: 0.3070

Epoch 14/150

4317/4317 [==============================] - 0s 72us/sample - loss: 1.3966 - accuracy: 0.6477 - val_loss: 1.8881 - val_accuracy: 0.3465

Epoch 15/150

4317/4317 [==============================] - 0s 70us/sample - loss: 1.2522 - accuracy: 0.6933 - val_loss: 1.7991 - val_accuracy: 0.3904

Epoch 16/150

4317/4317 [==============================] - 0s 72us/sample - loss: 1.1229 - accuracy: 0.7385 - val_loss: 1.6400 - val_accuracy: 0.4342

Epoch 17/150

4317/4317 [==============================] - 0s 71us/sample - loss: 1.0108 - accuracy: 0.7788 - val_loss: 1.5657 - val_accuracy: 0.4386

Epoch 18/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.9020 - accuracy: 0.8135 - val_loss: 1.4222 - val_accuracy: 0.5000

Epoch 19/150

4317/4317 [==============================] - 0s 76us/sample - loss: 0.7954 - accuracy: 0.8552 - val_loss: 1.3541 - val_accuracy: 0.5570

Epoch 20/150

4317/4317 [==============================] - 0s 77us/sample - loss: 0.7122 - accuracy: 0.8731 - val_loss: 1.2750 - val_accuracy: 0.5482

Epoch 21/150

4317/4317 [==============================] - 0s 84us/sample - loss: 0.6331 - accuracy: 0.8972 - val_loss: 1.1936 - val_accuracy: 0.5789

Epoch 22/150

4317/4317 [==============================] - 0s 73us/sample - loss: 0.5631 - accuracy: 0.9189 - val_loss: 1.1300 - val_accuracy: 0.6184

Epoch 23/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.5075 - accuracy: 0.9338 - val_loss: 1.0307 - val_accuracy: 0.6184

Epoch 24/150

4317/4317 [==============================] - 0s 70us/sample - loss: 0.4497 - accuracy: 0.9537 - val_loss: 0.9534 - val_accuracy: 0.7018

Epoch 25/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.3994 - accuracy: 0.9618 - val_loss: 0.8998 - val_accuracy: 0.6930

Epoch 26/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.3595 - accuracy: 0.9736 - val_loss: 0.8685 - val_accuracy: 0.7325

Epoch 27/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.3234 - accuracy: 0.9782 - val_loss: 0.7939 - val_accuracy: 0.7500

Epoch 28/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.2904 - accuracy: 0.9824 - val_loss: 0.7635 - val_accuracy: 0.7588

Epoch 29/150

4317/4317 [==============================] - 0s 74us/sample - loss: 0.2588 - accuracy: 0.9907 - val_loss: 0.7200 - val_accuracy: 0.8026

Epoch 30/150

4317/4317 [==============================] - 0s 74us/sample - loss: 0.2360 - accuracy: 0.9926 - val_loss: 0.6916 - val_accuracy: 0.7675

Epoch 31/150

4317/4317 [==============================] - 0s 78us/sample - loss: 0.2093 - accuracy: 0.9956 - val_loss: 0.6719 - val_accuracy: 0.8026

Epoch 32/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.1904 - accuracy: 0.9965 - val_loss: 0.6259 - val_accuracy: 0.8289

Epoch 33/150

4317/4317 [==============================] - 0s 70us/sample - loss: 0.1736 - accuracy: 0.9977 - val_loss: 0.5968 - val_accuracy: 0.8202

Epoch 34/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.1598 - accuracy: 0.9981 - val_loss: 0.5789 - val_accuracy: 0.8553

Epoch 35/150

4317/4317 [==============================] - 0s 65us/sample - loss: 0.1458 - accuracy: 0.9984 - val_loss: 0.5585 - val_accuracy: 0.8465

Epoch 36/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.1312 - accuracy: 0.9993 - val_loss: 0.5115 - val_accuracy: 0.8553

Epoch 37/150

4317/4317 [==============================] - 0s 65us/sample - loss: 0.1192 - accuracy: 0.9998 - val_loss: 0.4978 - val_accuracy: 0.8728

Epoch 38/150

4317/4317 [==============================] - 0s 65us/sample - loss: 0.1105 - accuracy: 0.9995 - val_loss: 0.4853 - val_accuracy: 0.8728

Epoch 39/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.1033 - accuracy: 0.9995 - val_loss: 0.4869 - val_accuracy: 0.8596

Epoch 40/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0948 - accuracy: 0.9995 - val_loss: 0.4531 - val_accuracy: 0.8772

Epoch 41/150

4317/4317 [==============================] - 0s 70us/sample - loss: 0.0871 - accuracy: 1.0000 - val_loss: 0.4480 - val_accuracy: 0.8947

Epoch 42/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0807 - accuracy: 1.0000 - val_loss: 0.4217 - val_accuracy: 0.9123

Epoch 43/150

4317/4317 [==============================] - 0s 88us/sample - loss: 0.0748 - accuracy: 1.0000 - val_loss: 0.4156 - val_accuracy: 0.9035

Epoch 44/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0699 - accuracy: 1.0000 - val_loss: 0.3985 - val_accuracy: 0.9123

Epoch 45/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0654 - accuracy: 1.0000 - val_loss: 0.3836 - val_accuracy: 0.9079

Epoch 46/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0610 - accuracy: 1.0000 - val_loss: 0.3815 - val_accuracy: 0.9123

Epoch 47/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0578 - accuracy: 1.0000 - val_loss: 0.3640 - val_accuracy: 0.9211

Epoch 48/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0539 - accuracy: 1.0000 - val_loss: 0.3510 - val_accuracy: 0.9298

Epoch 49/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0503 - accuracy: 1.0000 - val_loss: 0.3391 - val_accuracy: 0.9298

Epoch 50/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0472 - accuracy: 1.0000 - val_loss: 0.3295 - val_accuracy: 0.9342

Epoch 51/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.0444 - accuracy: 1.0000 - val_loss: 0.3253 - val_accuracy: 0.9342

Epoch 52/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0421 - accuracy: 1.0000 - val_loss: 0.3217 - val_accuracy: 0.9254

Epoch 53/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0398 - accuracy: 1.0000 - val_loss: 0.3170 - val_accuracy: 0.9386

Epoch 54/150

4317/4317 [==============================] - 0s 81us/sample - loss: 0.0381 - accuracy: 1.0000 - val_loss: 0.3051 - val_accuracy: 0.9386

Epoch 55/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0357 - accuracy: 1.0000 - val_loss: 0.2931 - val_accuracy: 0.9298

Epoch 56/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0338 - accuracy: 1.0000 - val_loss: 0.2929 - val_accuracy: 0.9342

Epoch 57/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0321 - accuracy: 1.0000 - val_loss: 0.2870 - val_accuracy: 0.9342

Epoch 58/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0307 - accuracy: 1.0000 - val_loss: 0.2890 - val_accuracy: 0.9386

Epoch 59/150

4317/4317 [==============================] - 0s 73us/sample - loss: 0.0291 - accuracy: 1.0000 - val_loss: 0.2695 - val_accuracy: 0.9430

Epoch 60/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0278 - accuracy: 1.0000 - val_loss: 0.2661 - val_accuracy: 0.9342

Epoch 61/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0264 - accuracy: 1.0000 - val_loss: 0.2644 - val_accuracy: 0.9386

Epoch 62/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0252 - accuracy: 1.0000 - val_loss: 0.2619 - val_accuracy: 0.9386

Epoch 63/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0241 - accuracy: 1.0000 - val_loss: 0.2538 - val_accuracy: 0.9386

Epoch 64/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0230 - accuracy: 1.0000 - val_loss: 0.2477 - val_accuracy: 0.9386

Epoch 65/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0219 - accuracy: 1.0000 - val_loss: 0.2456 - val_accuracy: 0.9386

Epoch 66/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0210 - accuracy: 1.0000 - val_loss: 0.2373 - val_accuracy: 0.9386

Epoch 67/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0202 - accuracy: 1.0000 - val_loss: 0.2345 - val_accuracy: 0.9430

Epoch 68/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0193 - accuracy: 1.0000 - val_loss: 0.2317 - val_accuracy: 0.9430

Epoch 69/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0185 - accuracy: 1.0000 - val_loss: 0.2297 - val_accuracy: 0.9430

Epoch 70/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0178 - accuracy: 1.0000 - val_loss: 0.2233 - val_accuracy: 0.9430

Epoch 71/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0170 - accuracy: 1.0000 - val_loss: 0.2252 - val_accuracy: 0.9474

Epoch 72/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0164 - accuracy: 1.0000 - val_loss: 0.2202 - val_accuracy: 0.9430

Epoch 73/150

4317/4317 [==============================] - 0s 65us/sample - loss: 0.0157 - accuracy: 1.0000 - val_loss: 0.2169 - val_accuracy: 0.9430

Epoch 74/150

4317/4317 [==============================] - 0s 65us/sample - loss: 0.0152 - accuracy: 1.0000 - val_loss: 0.2107 - val_accuracy: 0.9430

Epoch 75/150

4317/4317 [==============================] - 0s 66us/sample - loss: 0.0146 - accuracy: 1.0000 - val_loss: 0.2088 - val_accuracy: 0.9430

Epoch 76/150

4317/4317 [==============================] - 0s 66us/sample - loss: 0.0140 - accuracy: 1.0000 - val_loss: 0.2087 - val_accuracy: 0.9474

Epoch 77/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0136 - accuracy: 1.0000 - val_loss: 0.2041 - val_accuracy: 0.9474

Epoch 78/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.0131 - accuracy: 1.0000 - val_loss: 0.2036 - val_accuracy: 0.9474

Epoch 79/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0126 - accuracy: 1.0000 - val_loss: 0.1982 - val_accuracy: 0.9518

Epoch 80/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0122 - accuracy: 1.0000 - val_loss: 0.1977 - val_accuracy: 0.9430

Epoch 81/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0117 - accuracy: 1.0000 - val_loss: 0.1929 - val_accuracy: 0.9474

Epoch 82/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0113 - accuracy: 1.0000 - val_loss: 0.1895 - val_accuracy: 0.9518

Epoch 83/150

4317/4317 [==============================] - 0s 101us/sample - loss: 0.0109 - accuracy: 1.0000 - val_loss: 0.1879 - val_accuracy: 0.9474

Epoch 84/150

4317/4317 [==============================] - 0s 78us/sample - loss: 0.0105 - accuracy: 1.0000 - val_loss: 0.1843 - val_accuracy: 0.9474

Epoch 85/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0102 - accuracy: 1.0000 - val_loss: 0.1891 - val_accuracy: 0.9474

Epoch 86/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0098 - accuracy: 1.0000 - val_loss: 0.1777 - val_accuracy: 0.9474

Epoch 87/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0096 - accuracy: 1.0000 - val_loss: 0.1806 - val_accuracy: 0.9474

Epoch 88/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0093 - accuracy: 1.0000 - val_loss: 0.1760 - val_accuracy: 0.9474

Epoch 89/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0089 - accuracy: 1.0000 - val_loss: 0.1787 - val_accuracy: 0.9474

Epoch 90/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0086 - accuracy: 1.0000 - val_loss: 0.1720 - val_accuracy: 0.9474

Epoch 91/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0083 - accuracy: 1.0000 - val_loss: 0.1710 - val_accuracy: 0.9474

Epoch 92/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0081 - accuracy: 1.0000 - val_loss: 0.1697 - val_accuracy: 0.9474

Epoch 93/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0079 - accuracy: 1.0000 - val_loss: 0.1662 - val_accuracy: 0.9474

Epoch 94/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0076 - accuracy: 1.0000 - val_loss: 0.1641 - val_accuracy: 0.9474

Epoch 95/150

4317/4317 [==============================] - 0s 70us/sample - loss: 0.0074 - accuracy: 1.0000 - val_loss: 0.1645 - val_accuracy: 0.9518

Epoch 96/150

4317/4317 [==============================] - 0s 70us/sample - loss: 0.0071 - accuracy: 1.0000 - val_loss: 0.1657 - val_accuracy: 0.9474

Epoch 97/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.0069 - accuracy: 1.0000 - val_loss: 0.1620 - val_accuracy: 0.9474

Epoch 98/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0067 - accuracy: 1.0000 - val_loss: 0.1634 - val_accuracy: 0.9518

Epoch 99/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0065 - accuracy: 1.0000 - val_loss: 0.1582 - val_accuracy: 0.9474

Epoch 100/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0063 - accuracy: 1.0000 - val_loss: 0.1550 - val_accuracy: 0.9518

Epoch 101/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0061 - accuracy: 1.0000 - val_loss: 0.1540 - val_accuracy: 0.9518

Epoch 102/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0060 - accuracy: 1.0000 - val_loss: 0.1543 - val_accuracy: 0.9474

Epoch 103/150

4317/4317 [==============================] - 0s 74us/sample - loss: 0.0058 - accuracy: 1.0000 - val_loss: 0.1492 - val_accuracy: 0.9518

Epoch 104/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0056 - accuracy: 1.0000 - val_loss: 0.1495 - val_accuracy: 0.9518

Epoch 105/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0055 - accuracy: 1.0000 - val_loss: 0.1502 - val_accuracy: 0.9518

Epoch 106/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0053 - accuracy: 1.0000 - val_loss: 0.1466 - val_accuracy: 0.9518

Epoch 107/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0052 - accuracy: 1.0000 - val_loss: 0.1467 - val_accuracy: 0.9518

Epoch 108/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0050 - accuracy: 1.0000 - val_loss: 0.1439 - val_accuracy: 0.9518

Epoch 109/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0049 - accuracy: 1.0000 - val_loss: 0.1421 - val_accuracy: 0.9518

Epoch 110/150

4317/4317 [==============================] - 0s 66us/sample - loss: 0.0047 - accuracy: 1.0000 - val_loss: 0.1427 - val_accuracy: 0.9474

Epoch 111/150

4317/4317 [==============================] - 0s 64us/sample - loss: 0.0046 - accuracy: 1.0000 - val_loss: 0.1434 - val_accuracy: 0.9474

Epoch 112/150

4317/4317 [==============================] - 0s 66us/sample - loss: 0.0045 - accuracy: 1.0000 - val_loss: 0.1413 - val_accuracy: 0.9518

Epoch 113/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0043 - accuracy: 1.0000 - val_loss: 0.1397 - val_accuracy: 0.9518

Epoch 114/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0042 - accuracy: 1.0000 - val_loss: 0.1416 - val_accuracy: 0.9518

Epoch 115/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0041 - accuracy: 1.0000 - val_loss: 0.1357 - val_accuracy: 0.9518

Epoch 116/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0040 - accuracy: 1.0000 - val_loss: 0.1359 - val_accuracy: 0.9518

Epoch 117/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0039 - accuracy: 1.0000 - val_loss: 0.1377 - val_accuracy: 0.9518

Epoch 118/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.0038 - accuracy: 1.0000 - val_loss: 0.1331 - val_accuracy: 0.9518

Epoch 119/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0037 - accuracy: 1.0000 - val_loss: 0.1327 - val_accuracy: 0.9518

Epoch 120/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0036 - accuracy: 1.0000 - val_loss: 0.1339 - val_accuracy: 0.9518

Epoch 121/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0035 - accuracy: 1.0000 - val_loss: 0.1334 - val_accuracy: 0.9518

Epoch 122/150

4317/4317 [==============================] - 0s 74us/sample - loss: 0.0034 - accuracy: 1.0000 - val_loss: 0.1301 - val_accuracy: 0.9518

Epoch 123/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0033 - accuracy: 1.0000 - val_loss: 0.1290 - val_accuracy: 0.9518

Epoch 124/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0033 - accuracy: 1.0000 - val_loss: 0.1264 - val_accuracy: 0.9518

Epoch 125/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0032 - accuracy: 1.0000 - val_loss: 0.1277 - val_accuracy: 0.9518

Epoch 126/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.0031 - accuracy: 1.0000 - val_loss: 0.1259 - val_accuracy: 0.9518

Epoch 127/150

4317/4317 [==============================] - 0s 77us/sample - loss: 0.0030 - accuracy: 1.0000 - val_loss: 0.1272 - val_accuracy: 0.9518

Epoch 128/150

4317/4317 [==============================] - 0s 70us/sample - loss: 0.0029 - accuracy: 1.0000 - val_loss: 0.1249 - val_accuracy: 0.9518

Epoch 129/150

4317/4317 [==============================] - 0s 69us/sample - loss: 0.0029 - accuracy: 1.0000 - val_loss: 0.1252 - val_accuracy: 0.9518

Epoch 130/150

4317/4317 [==============================] - 0s 73us/sample - loss: 0.0028 - accuracy: 1.0000 - val_loss: 0.1254 - val_accuracy: 0.9518

Epoch 131/150

4317/4317 [==============================] - 0s 72us/sample - loss: 0.0027 - accuracy: 1.0000 - val_loss: 0.1207 - val_accuracy: 0.9561

Epoch 132/150

4317/4317 [==============================] - 0s 75us/sample - loss: 0.0027 - accuracy: 1.0000 - val_loss: 0.1218 - val_accuracy: 0.9518

Epoch 133/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0026 - accuracy: 1.0000 - val_loss: 0.1205 - val_accuracy: 0.9518

Epoch 134/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0025 - accuracy: 1.0000 - val_loss: 0.1175 - val_accuracy: 0.9561

Epoch 135/150

4317/4317 [==============================] - 0s 68us/sample - loss: 0.0025 - accuracy: 1.0000 - val_loss: 0.1187 - val_accuracy: 0.9518

Epoch 136/150

4317/4317 [==============================] - 0s 67us/sample - loss: 0.0024 - accuracy: 1.0000 - val_loss: 0.1182 - val_accuracy: 0.9518

Epoch 137/150

4317/4317 [==============================] - 0s 71us/sample - loss: 0.0024 - accuracy: 1.0000 - val_loss: 0.1177 - val_accuracy: 0.9561

False Expected: # Predicted: a Input: x Confidence: 0.6288551

False Expected: # Predicted: m Input: yo Confidence: 0.96041507

False Expected: # Predicted: a Input: ham Confidence: 0.8889916

False Expected: # Predicted: o Input: four Confidence: 0.8232217

False Expected: # Predicted: a Input: f ive Confidence: 0.9190856

False Expected: # Predicted: z Input: sixsix Confidence: 0.9657479

False Expected: # Predicted: a Input: seven z Confidence: 0.9826301

False Expected: # Predicted: z Input: ei ght x Confidence: 0.9266826

False Expected: # Predicted: a Input: nine hops Confidence: 0.9878347

False Expected: # Predicted: f Input: ten strike Confidence: 0.7795283

True Expected: d Predicted: d Input: hello world Confidence: 0.97773224

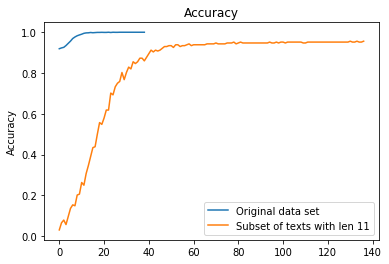

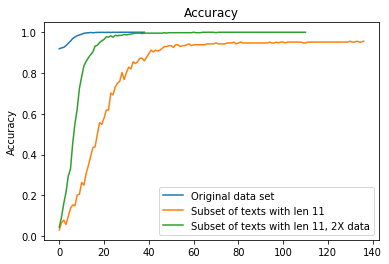

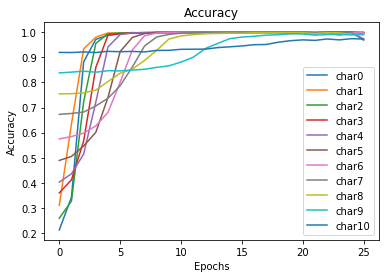

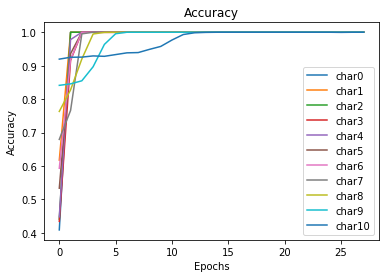

plt.plot(hist10.history['val_accuracy'], label='Original data set')

plt.plot(hist10_with_size_11.history['val_accuracy'], label='Subset of texts with len 11')

plt.title('Accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(loc="best")

axes = plt.gca()

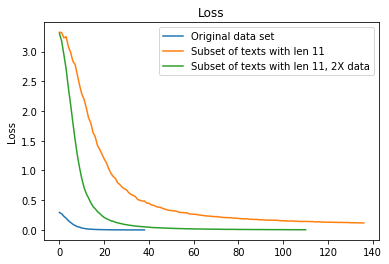

plt.show()

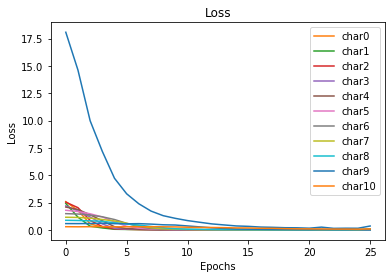

plt.plot(hist10.history['val_loss'], label='Original data set')

plt.plot(hist10_with_size_11.history['val_loss'], label='Subset of texts with len 11')

plt.title('Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(loc="best")

plt.show()

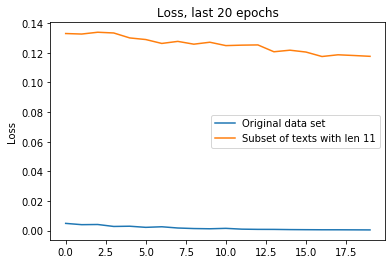

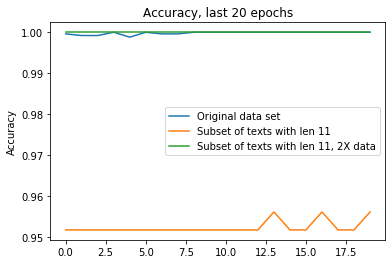

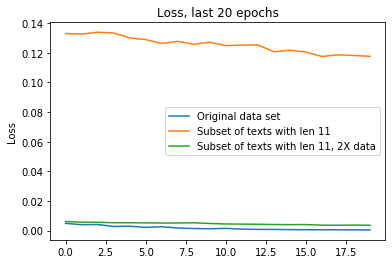

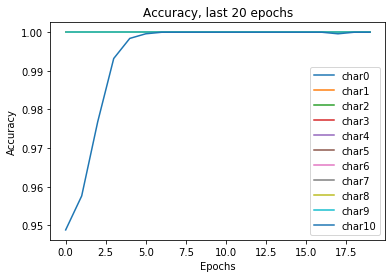

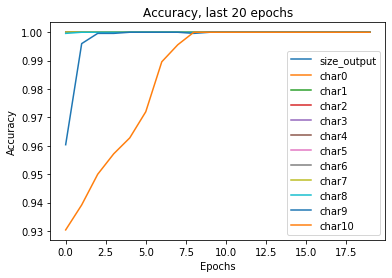

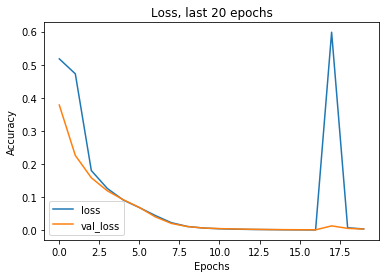

The trend looks as expected: less data resulted in less progress in both accuracy and loss. Let’s see how the last 20 epochs behave in both cases:

plt.plot(hist10.history['val_accuracy'][-20:], label='Original data set')

plt.plot(hist10_with_size_11.history['val_accuracy'][-20:], label='Subset of texts with len 11')

plt.title('Accuracy, last 20 epochs')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(loc="best")

axes = plt.gca()

plt.show()

plt.plot(hist10.history['val_loss'][-20:], label='Original data set')

plt.plot(hist10_with_size_11.history['val_loss'][-20:], label='Subset of texts with len 11')

plt.title('Loss, last 20 epochs')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(loc="best")

plt.show()

Not good. The set of length-11 texts is too small to reach the original performance.
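A numeric summary of the plateau can complement the plots. Below is a minimal stdlib sketch with these assumptions:

- `tail_summary` is a hypothetical helper.
- The dict mimics the shape of Keras' `History.history`, a mapping from metric name to a per-epoch list.
- Its only values are the `val_accuracy` figures for epochs 118-137 of the first length-11 run, copied from the log above.

Passing `hist10.history` or `hist10_with_size_11.history` instead would summarize the real runs.

```python
from statistics import mean

# val_accuracy of the first length-11 run, epochs 118-137, copied from the training log.
# Shaped like Keras' History.history; only this one key is filled in.
history_len11 = {
    "val_accuracy": [
        0.9518, 0.9518, 0.9518, 0.9518, 0.9518, 0.9518, 0.9518, 0.9518, 0.9518, 0.9518,
        0.9518, 0.9518, 0.9518, 0.9561, 0.9518, 0.9518, 0.9561, 0.9518, 0.9518, 0.9561,
    ]
}


def tail_summary(history, key, n=20):
    """Mean, min and max of the last `n` recorded values of `key`."""
    tail = history[key][-n:]
    return mean(tail), min(tail), max(tail)


avg, low, high = tail_summary(history_len11, "val_accuracy")
print(f"last 20 epochs: mean {avg:.4f}, min {low:.4f}, max {high:.4f}")
```

On these values the length-11 model sits at about 0.952 validation accuracy, while its training accuracy has been 1.0000 since epoch 41. Further epochs mostly stopped helping.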

To test this idea further, a new dataset is generated with twice as many samples as in the previous run.
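The sample counts in the training logs can be checked with a little arithmetic. The sketch below assumes that `train_model` (defined earlier in the notebook and not shown here) holds out 5% of the samples for validation. This is an inference from the logged numbers, not something stated in the source.

Keras' `validation_split` takes the validation samples from the end of the arrays. The hypothetical `split_sizes` helper mirrors that by rounding the training count down.

```python
def split_sizes(n_samples, validation_split=0.05):
    """Train/validation sizes when the last `validation_split` fraction is held out.

    The training count is truncated to an integer (assumed behavior)."""
    n_train = int(n_samples * (1 - validation_split))
    return n_train, n_samples - n_train


original_size = int(50000 / 11)      # length-11 subset from section 4.1
doubled_size = int(50000 / 11 * 2)   # twice as many samples

print(original_size, split_sizes(original_size))
print(doubled_size, split_sizes(doubled_size))
```

Both results agree with the logs: 4317/228 for the first length-11 run and 8635/455 for the doubled one.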

(training_data11, training_labels11) = generate_dataset(int(50000/11*2), min_size=11, max_size=11) # original dataset size was 50000/11 - 50k samples uniformly distributed over sizes 1..11

training_labels11_char10 = make_labels_for_position(training_labels11, 10)

model = define_char_classifier()

hist10_with_size_11_2x = train_model(model, training_data11, training_labels11_char10, epochs=150)

Generating

Done: 100 %

Train on 8635 samples, validate on 455 samples

Epoch 1/150

8635/8635 [==============================] - 1s 117us/sample - loss: 3.3309 - accuracy: 0.0491 - val_loss: 3.3035 - val_accuracy: 0.0440

Epoch 2/150

8635/8635 [==============================] - 0s 51us/sample - loss: 3.1605 - accuracy: 0.0990 - val_loss: 3.1738 - val_accuracy: 0.0945

Epoch 3/150

8635/8635 [==============================] - 0s 51us/sample - loss: 2.9574 - accuracy: 0.1634 - val_loss: 2.9456 - val_accuracy: 0.1604

Epoch 4/150

8635/8635 [==============================] - 0s 55us/sample - loss: 2.6786 - accuracy: 0.2327 - val_loss: 2.7075 - val_accuracy: 0.2154

Epoch 5/150

8635/8635 [==============================] - 1s 64us/sample - loss: 2.3789 - accuracy: 0.3032 - val_loss: 2.3894 - val_accuracy: 0.2945

Epoch 6/150

8635/8635 [==============================] - 1s 63us/sample - loss: 2.0535 - accuracy: 0.4118 - val_loss: 2.1108 - val_accuracy: 0.3297

Epoch 7/150

8635/8635 [==============================] - 1s 62us/sample - loss: 1.7278 - accuracy: 0.5266 - val_loss: 1.8076 - val_accuracy: 0.4571

Epoch 8/150

8635/8635 [==============================] - 1s 68us/sample - loss: 1.4237 - accuracy: 0.6483 - val_loss: 1.5176 - val_accuracy: 0.5516

Epoch 9/150

8635/8635 [==============================] - 1s 65us/sample - loss: 1.1454 - accuracy: 0.7473 - val_loss: 1.2581 - val_accuracy: 0.6242

Epoch 10/150

8635/8635 [==============================] - 1s 64us/sample - loss: 0.9165 - accuracy: 0.8270 - val_loss: 1.0448 - val_accuracy: 0.7253

Epoch 11/150

8635/8635 [==============================] - 1s 68us/sample - loss: 0.7312 - accuracy: 0.8774 - val_loss: 0.8624 - val_accuracy: 0.7824

Epoch 12/150

8635/8635 [==============================] - 1s 64us/sample - loss: 0.5897 - accuracy: 0.9097 - val_loss: 0.7155 - val_accuracy: 0.8352

Epoch 13/150

8635/8635 [==============================] - 1s 71us/sample - loss: 0.4817 - accuracy: 0.9289 - val_loss: 0.6114 - val_accuracy: 0.8571

Epoch 14/150

8635/8635 [==============================] - 1s 76us/sample - loss: 0.3994 - accuracy: 0.9433 - val_loss: 0.5373 - val_accuracy: 0.8747

Epoch 15/150

8635/8635 [==============================] - 1s 74us/sample - loss: 0.3285 - accuracy: 0.9650 - val_loss: 0.4594 - val_accuracy: 0.8901

Epoch 16/150

8635/8635 [==============================] - 1s 70us/sample - loss: 0.2739 - accuracy: 0.9749 - val_loss: 0.3945 - val_accuracy: 0.9033

Epoch 17/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.2320 - accuracy: 0.9844 - val_loss: 0.3555 - val_accuracy: 0.9319

Epoch 18/150

8635/8635 [==============================] - 1s 63us/sample - loss: 0.1941 - accuracy: 0.9888 - val_loss: 0.3088 - val_accuracy: 0.9363

Epoch 19/150

8635/8635 [==============================] - 1s 60us/sample - loss: 0.1631 - accuracy: 0.9925 - val_loss: 0.2766 - val_accuracy: 0.9495

Epoch 20/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.1396 - accuracy: 0.9950 - val_loss: 0.2399 - val_accuracy: 0.9582

Epoch 21/150

8635/8635 [==============================] - 1s 65us/sample - loss: 0.1199 - accuracy: 0.9971 - val_loss: 0.2104 - val_accuracy: 0.9648

Epoch 22/150

8635/8635 [==============================] - 1s 60us/sample - loss: 0.1033 - accuracy: 0.9986 - val_loss: 0.1905 - val_accuracy: 0.9780

Epoch 23/150

8635/8635 [==============================] - 1s 63us/sample - loss: 0.0899 - accuracy: 0.9985 - val_loss: 0.1720 - val_accuracy: 0.9758

Epoch 24/150

8635/8635 [==============================] - 1s 65us/sample - loss: 0.0788 - accuracy: 0.9992 - val_loss: 0.1546 - val_accuracy: 0.9824

Epoch 25/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0689 - accuracy: 0.9995 - val_loss: 0.1457 - val_accuracy: 0.9758

Epoch 26/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0608 - accuracy: 0.9998 - val_loss: 0.1289 - val_accuracy: 0.9846

Epoch 27/150

8635/8635 [==============================] - 1s 72us/sample - loss: 0.0540 - accuracy: 1.0000 - val_loss: 0.1199 - val_accuracy: 0.9824

Epoch 28/150

8635/8635 [==============================] - 1s 73us/sample - loss: 0.0480 - accuracy: 0.9999 - val_loss: 0.1114 - val_accuracy: 0.9846

Epoch 29/150

8635/8635 [==============================] - 1s 68us/sample - loss: 0.0432 - accuracy: 1.0000 - val_loss: 0.1023 - val_accuracy: 0.9846

Epoch 30/150

8635/8635 [==============================] - 1s 68us/sample - loss: 0.0388 - accuracy: 1.0000 - val_loss: 0.0935 - val_accuracy: 0.9890

Epoch 31/150

8635/8635 [==============================] - 1s 63us/sample - loss: 0.0349 - accuracy: 1.0000 - val_loss: 0.0880 - val_accuracy: 0.9868

Epoch 32/150

8635/8635 [==============================] - 1s 66us/sample - loss: 0.0315 - accuracy: 1.0000 - val_loss: 0.0799 - val_accuracy: 0.9890

Epoch 33/150

8635/8635 [==============================] - 1s 67us/sample - loss: 0.0286 - accuracy: 1.0000 - val_loss: 0.0767 - val_accuracy: 0.9912

Epoch 34/150

8635/8635 [==============================] - 1s 60us/sample - loss: 0.0263 - accuracy: 1.0000 - val_loss: 0.0704 - val_accuracy: 0.9934

Epoch 35/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0240 - accuracy: 1.0000 - val_loss: 0.0646 - val_accuracy: 0.9956

Epoch 36/150

8635/8635 [==============================] - 1s 61us/sample - loss: 0.0219 - accuracy: 1.0000 - val_loss: 0.0617 - val_accuracy: 0.9956

Epoch 37/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0201 - accuracy: 1.0000 - val_loss: 0.0580 - val_accuracy: 0.9956

Epoch 38/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0185 - accuracy: 1.0000 - val_loss: 0.0549 - val_accuracy: 0.9934

Epoch 39/150

8635/8635 [==============================] - 1s 65us/sample - loss: 0.0171 - accuracy: 1.0000 - val_loss: 0.0517 - val_accuracy: 0.9956

Epoch 40/150

8635/8635 [==============================] - 1s 65us/sample - loss: 0.0158 - accuracy: 1.0000 - val_loss: 0.0494 - val_accuracy: 0.9956

Epoch 41/150

8635/8635 [==============================] - 1s 63us/sample - loss: 0.0146 - accuracy: 1.0000 - val_loss: 0.0469 - val_accuracy: 0.9956

Epoch 42/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0136 - accuracy: 1.0000 - val_loss: 0.0426 - val_accuracy: 0.9956

Epoch 43/150

8635/8635 [==============================] - 1s 60us/sample - loss: 0.0125 - accuracy: 1.0000 - val_loss: 0.0395 - val_accuracy: 0.9956

Epoch 44/150

8635/8635 [==============================] - 1s 66us/sample - loss: 0.0117 - accuracy: 1.0000 - val_loss: 0.0383 - val_accuracy: 0.9956

Epoch 45/150

8635/8635 [==============================] - 1s 68us/sample - loss: 0.0109 - accuracy: 1.0000 - val_loss: 0.0371 - val_accuracy: 0.9956

Epoch 46/150

8635/8635 [==============================] - 1s 71us/sample - loss: 0.0102 - accuracy: 1.0000 - val_loss: 0.0352 - val_accuracy: 0.9956

Epoch 47/150

8635/8635 [==============================] - 1s 61us/sample - loss: 0.0094 - accuracy: 1.0000 - val_loss: 0.0338 - val_accuracy: 0.9956

Epoch 48/150

8635/8635 [==============================] - 1s 61us/sample - loss: 0.0088 - accuracy: 1.0000 - val_loss: 0.0313 - val_accuracy: 0.9978

Epoch 49/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0083 - accuracy: 1.0000 - val_loss: 0.0300 - val_accuracy: 0.9956

Epoch 50/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0077 - accuracy: 1.0000 - val_loss: 0.0283 - val_accuracy: 0.9978

Epoch 51/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0073 - accuracy: 1.0000 - val_loss: 0.0275 - val_accuracy: 0.9978

Epoch 52/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0068 - accuracy: 1.0000 - val_loss: 0.0257 - val_accuracy: 0.9978

Epoch 53/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0064 - accuracy: 1.0000 - val_loss: 0.0245 - val_accuracy: 0.9978

Epoch 54/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0060 - accuracy: 1.0000 - val_loss: 0.0237 - val_accuracy: 0.9978

Epoch 55/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0057 - accuracy: 1.0000 - val_loss: 0.0229 - val_accuracy: 0.9978

Epoch 56/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0054 - accuracy: 1.0000 - val_loss: 0.0221 - val_accuracy: 0.9978

Epoch 57/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0051 - accuracy: 1.0000 - val_loss: 0.0204 - val_accuracy: 0.9978

Epoch 58/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0048 - accuracy: 1.0000 - val_loss: 0.0194 - val_accuracy: 0.9978

Epoch 59/150

8635/8635 [==============================] - 1s 61us/sample - loss: 0.0045 - accuracy: 1.0000 - val_loss: 0.0192 - val_accuracy: 0.9978

Epoch 60/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0043 - accuracy: 1.0000 - val_loss: 0.0181 - val_accuracy: 0.9978

Epoch 61/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0040 - accuracy: 1.0000 - val_loss: 0.0172 - val_accuracy: 1.0000

Epoch 62/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0038 - accuracy: 1.0000 - val_loss: 0.0169 - val_accuracy: 0.9978

Epoch 63/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0036 - accuracy: 1.0000 - val_loss: 0.0164 - val_accuracy: 0.9978

Epoch 64/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0034 - accuracy: 1.0000 - val_loss: 0.0156 - val_accuracy: 0.9978

Epoch 65/150

8635/8635 [==============================] - 0s 57us/sample - loss: 0.0032 - accuracy: 1.0000 - val_loss: 0.0152 - val_accuracy: 1.0000

Epoch 66/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0031 - accuracy: 1.0000 - val_loss: 0.0146 - val_accuracy: 1.0000

Epoch 67/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0029 - accuracy: 1.0000 - val_loss: 0.0134 - val_accuracy: 1.0000

Epoch 68/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0028 - accuracy: 1.0000 - val_loss: 0.0131 - val_accuracy: 1.0000

Epoch 69/150

8635/8635 [==============================] - 1s 61us/sample - loss: 0.0026 - accuracy: 1.0000 - val_loss: 0.0128 - val_accuracy: 1.0000

Epoch 70/150

8635/8635 [==============================] - 0s 57us/sample - loss: 0.0025 - accuracy: 1.0000 - val_loss: 0.0122 - val_accuracy: 1.0000

Epoch 71/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0024 - accuracy: 1.0000 - val_loss: 0.0124 - val_accuracy: 0.9978

Epoch 72/150

8635/8635 [==============================] - 0s 57us/sample - loss: 0.0022 - accuracy: 1.0000 - val_loss: 0.0118 - val_accuracy: 1.0000

Epoch 73/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0021 - accuracy: 1.0000 - val_loss: 0.0110 - val_accuracy: 1.0000

Epoch 74/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0020 - accuracy: 1.0000 - val_loss: 0.0109 - val_accuracy: 1.0000

Epoch 75/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0019 - accuracy: 1.0000 - val_loss: 0.0099 - val_accuracy: 1.0000

Epoch 76/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0018 - accuracy: 1.0000 - val_loss: 0.0100 - val_accuracy: 1.0000

Epoch 77/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0018 - accuracy: 1.0000 - val_loss: 0.0095 - val_accuracy: 1.0000

Epoch 78/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0017 - accuracy: 1.0000 - val_loss: 0.0096 - val_accuracy: 1.0000

Epoch 79/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0016 - accuracy: 1.0000 - val_loss: 0.0096 - val_accuracy: 1.0000

Epoch 80/150

8635/8635 [==============================] - 0s 57us/sample - loss: 0.0015 - accuracy: 1.0000 - val_loss: 0.0092 - val_accuracy: 1.0000

Epoch 81/150

8635/8635 [==============================] - 1s 58us/sample - loss: 0.0014 - accuracy: 1.0000 - val_loss: 0.0081 - val_accuracy: 1.0000

Epoch 82/150

8635/8635 [==============================] - 0s 56us/sample - loss: 0.0014 - accuracy: 1.0000 - val_loss: 0.0082 - val_accuracy: 1.0000

Epoch 83/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0013 - accuracy: 1.0000 - val_loss: 0.0082 - val_accuracy: 1.0000

Epoch 84/150

8635/8635 [==============================] - 1s 59us/sample - loss: 0.0012 - accuracy: 1.0000 - val_loss: 0.0078 - val_accuracy: 1.0000

Epoch 85/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0012 - accuracy: 1.0000 - val_loss: 0.0076 - val_accuracy: 1.0000

Epoch 86/150

8635/8635 [==============================] - 0s 58us/sample - loss: 0.0011 - accuracy: 1.0000 - val_loss: 0.0074 - val_accuracy: 1.0000

Epoch 87/150

8635/8635 [==============================] - 1s 60us/sample - loss: 0.0011 - accuracy: 1.0000 - val_loss: 0.0069 - val_accuracy: 1.0000

Epoch 88/150

8635/8635 [==============================] - 1s 62us/sample - loss: 0.0010 - accuracy: 1.0000 - val_loss: 0.0067 - val_accuracy: 1.0000

Epoch 89/150

8635/8635 [==============================] - 0s 58us/sample - loss: 9.8558e-04 - accuracy: 1.0000 - val_loss: 0.0067 - val_accuracy: 1.0000

Epoch 90/150

8635/8635 [==============================] - 1s 59us/sample - loss: 9.4302e-04 - accuracy: 1.0000 - val_loss: 0.0065 - val_accuracy: 1.0000

Epoch 91/150

8635/8635 [==============================] - 1s 58us/sample - loss: 8.9881e-04 - accuracy: 1.0000 - val_loss: 0.0063 - val_accuracy: 1.0000

Epoch 92/150

8635/8635 [==============================] - 1s 60us/sample - loss: 8.5815e-04 - accuracy: 1.0000 - val_loss: 0.0061 - val_accuracy: 1.0000

Epoch 93/150

8635/8635 [==============================] - 0s 57us/sample - loss: 8.1993e-04 - accuracy: 1.0000 - val_loss: 0.0057 - val_accuracy: 1.0000

Epoch 94/150

8635/8635 [==============================] - 1s 58us/sample - loss: 7.8299e-04 - accuracy: 1.0000 - val_loss: 0.0056 - val_accuracy: 1.0000

Epoch 95/150

8635/8635 [==============================] - 0s 56us/sample - loss: 7.4757e-04 - accuracy: 1.0000 - val_loss: 0.0053 - val_accuracy: 1.0000

Epoch 96/150

8635/8635 [==============================] - 1s 59us/sample - loss: 7.1597e-04 - accuracy: 1.0000 - val_loss: 0.0053 - val_accuracy: 1.0000

Epoch 97/150

8635/8635 [==============================] - 0s 57us/sample - loss: 6.8339e-04 - accuracy: 1.0000 - val_loss: 0.0052 - val_accuracy: 1.0000

Epoch 98/150

8635/8635 [==============================] - 1s 59us/sample - loss: 6.5364e-04 - accuracy: 1.0000 - val_loss: 0.0051 - val_accuracy: 1.0000

Epoch 99/150

8635/8635 [==============================] - 1s 58us/sample - loss: 6.2444e-04 - accuracy: 1.0000 - val_loss: 0.0051 - val_accuracy: 1.0000

Epoch 100/150

8635/8635 [==============================] - 1s 60us/sample - loss: 5.9848e-04 - accuracy: 1.0000 - val_loss: 0.0053 - val_accuracy: 1.0000

Epoch 101/150

8635/8635 [==============================] - 0s 58us/sample - loss: 5.7203e-04 - accuracy: 1.0000 - val_loss: 0.0048 - val_accuracy: 1.0000

Epoch 102/150

8635/8635 [==============================] - 1s 65us/sample - loss: 5.4724e-04 - accuracy: 1.0000 - val_loss: 0.0045 - val_accuracy: 1.0000

Epoch 103/150

8635/8635 [==============================] - 1s 68us/sample - loss: 5.2431e-04 - accuracy: 1.0000 - val_loss: 0.0044 - val_accuracy: 1.0000

Epoch 104/150

8635/8635 [==============================] - 1s 59us/sample - loss: 5.0180e-04 - accuracy: 1.0000 - val_loss: 0.0043 - val_accuracy: 1.0000

Epoch 105/150

8635/8635 [==============================] - 1s 59us/sample - loss: 4.8044e-04 - accuracy: 1.0000 - val_loss: 0.0041 - val_accuracy: 1.0000

Epoch 106/150

8635/8635 [==============================] - 0s 57us/sample - loss: 4.5914e-04 - accuracy: 1.0000 - val_loss: 0.0040 - val_accuracy: 1.0000

Epoch 107/150

8635/8635 [==============================] - 1s 59us/sample - loss: 4.4048e-04 - accuracy: 1.0000 - val_loss: 0.0040 - val_accuracy: 1.0000

Epoch 108/150

8635/8635 [==============================] - 1s 67us/sample - loss: 4.2111e-04 - accuracy: 1.0000 - val_loss: 0.0037 - val_accuracy: 1.0000

Epoch 109/150

8635/8635 [==============================] - 1s 66us/sample - loss: 4.0344e-04 - accuracy: 1.0000 - val_loss: 0.0036 - val_accuracy: 1.0000

Epoch 110/150

8635/8635 [==============================] - 1s 65us/sample - loss: 3.8637e-04 - accuracy: 1.0000 - val_loss: 0.0037 - val_accuracy: 1.0000

Epoch 111/150

8635/8635 [==============================] - 1s 62us/sample - loss: 3.7003e-04 - accuracy: 1.0000 - val_loss: 0.0036 - val_accuracy: 1.0000

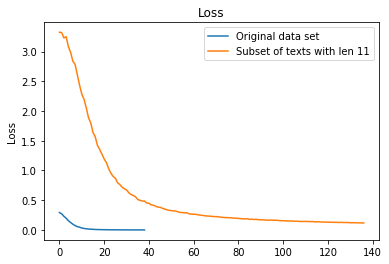

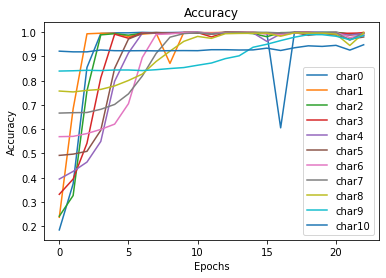

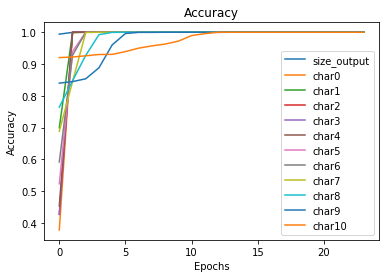

# plot validation accuracy for the original data set, the previous data set with 11-character texts, and the latest one with twice as much data

plt.plot(hist10.history['val_accuracy'], label='Original data set')

plt.plot(hist10_with_size_11.history['val_accuracy'], label='Subset of texts with len 11')

plt.plot(hist10_with_size_11_2x.history['val_accuracy'], label='Subset of texts with len 11, 2X data')

plt.title('Accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(loc="best")

plt.show()

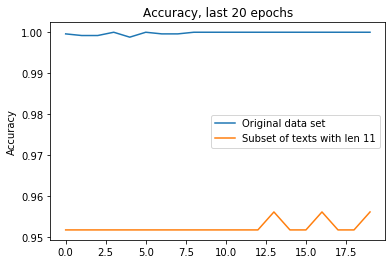

plt.plot(hist10.history['val_accuracy'][-20:], label='Original data set')

plt.plot(hist10_with_size_11.history['val_accuracy'][-20:], label='Subset of texts with len 11')

plt.plot(hist10_with_size_11_2x.history['val_accuracy'][-20:], label='Subset of texts with len 11, 2X data')

plt.title('Accuracy, last 20 epochs')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(loc="best")

plt.show()

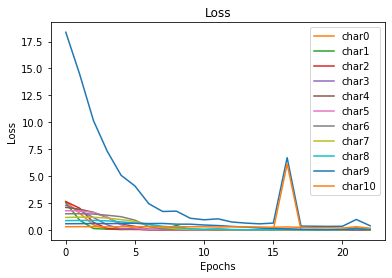

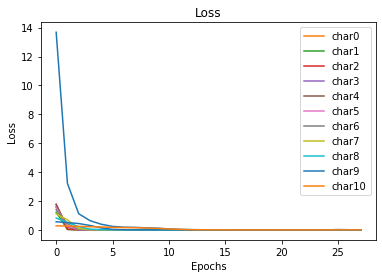

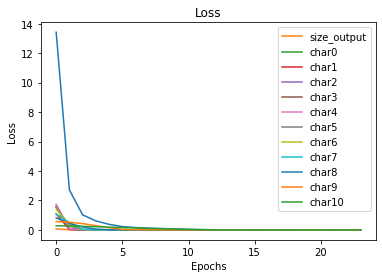
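The zoomed-in plots above slice the last 20 entries of each history, so the x-axis shows positions 0 to 19 rather than real epoch numbers. Runs of different lengths (for example, runs stopped early at different epochs) therefore get aligned by position rather than by epoch. Below is a minimal sketch that keeps the real 1-based epoch numbers. `last_n_epochs` is a hypothetical helper, not part of Keras or matplotlib, and it assumes each metric is a plain list as stored in `History.history`.

```python
def last_n_epochs(values, n=20):
    """Return (epochs, values) for the last n entries of a per-epoch metric list.

    Epoch numbers are 1-based, matching the "Epoch k/150" lines Keras prints.
    If the list is shorter than n, the whole list is returned.
    """
    start = max(len(values) - n, 0)
    epochs = list(range(start + 1, len(values) + 1))
    return epochs, list(values[start:])


# Hypothetical usage with the History objects from this notebook:
# plt.plot(*last_n_epochs(hist10.history['val_accuracy']), label='Original data set')
# plt.xlabel('Epochs')
```

Passing the `(epochs, values)` pair to `plt.plot` makes each curve sit at its own epochs, so a run that stopped earlier no longer overlaps the others artificially.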

plt.plot(hist10.history['val_loss'], label='Original data set')

plt.plot(hist10_with_size_11.history['val_loss'], label='Subset of texts with len 11')

plt.plot(hist10_with_size_11_2x.history['val_loss'], label='Subset of texts with len 11, 2X data')

plt.title('Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(loc="best")

plt.show()

plt.plot(hist10.history['val_loss'][-20:], label='Original data set')

plt.plot(hist10_with_size_11.history['val_loss'][-20:], label='Subset of texts with len 11')

plt.plot(hist10_with_size_11_2x.history['val_loss'][-20:], label='Subset of texts with len 11, 2X data')

plt.title('Loss, last 20 epochs')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(loc="best")

plt.show()

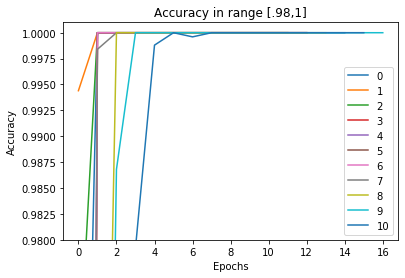
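Besides reading the curves by eye, you can compute the epoch from which validation accuracy never drops below a threshold again. The sketch below assumes the input is a list such as `History.history['val_accuracy']`. `first_stable_epoch` and its default threshold of 1.0 are illustrative choices, not part of the original notebook.

```python
def first_stable_epoch(values, threshold=1.0):
    """Return the 1-based epoch from which every later value is >= threshold.

    Returns None if the metric ends below the threshold (or the list is empty).
    """
    stable_from = None
    for epoch, value in enumerate(values, start=1):
        if value >= threshold:
            # Start a new stable streak only if we are not already in one.
            if stable_from is None:
                stable_from = epoch
        else:
            # Any dip below the threshold resets the streak.
            stable_from = None
    return stable_from


# Hypothetical usage with the History objects from this notebook:
# first_stable_epoch(hist10_with_size_11_2x.history['val_accuracy'])
```

A threshold slightly below 1.0 (e.g. 0.997) can be used to compare runs that never reach perfect validation accuracy.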

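The model trained on twice as much data performs very well. In the log above, validation accuracy stays at 1.0000 from epoch 72 onward, and validation loss keeps falling, reaching 0.0036 by epoch 111. Time to try a different network design.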

4.2 Better NN design

The original network has a single hidden layer, which may not be flexible enough to recognize all classes correctly. Let's add one more hidden layer and train the network on the original data set.

# a new model with an extra hidden layer